The Workshop on Information-Theoretic Analyses of Natural Languages was held as part of the DGfS Annual Meeting in Tübingen (Germany) on 22 February 2022.

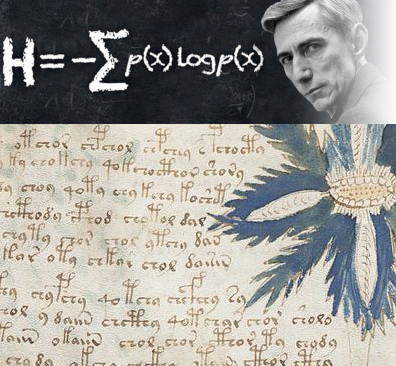

Summary: Languages transmit information. They are used to send messages across meters, kilometers, and around the globe. To better understand their information-carrying potential, we can harness information theory. This workshop first gives a brief introduction to the conceptual underpinnings of information-theoretic measures such as entropy, conditional entropy, and mutual information. Second, some problems, pitfalls, and possible solutions for their estimation are discussed. Third, we give some hands-on exercises for using these measures in research on natural languages.
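To make the three measures named in the summary concrete, here is a minimal Python sketch that estimates them from relative frequencies (the so-called plug-in estimator). It is not taken from the workshop materials: the function names, the toy string, and the choice of character bigrams are all assumptions made for illustration.

```python
from collections import Counter
from math import log2


def entropy(symbols):
    """Plug-in estimate of H(X) in bits from a sequence of hashable symbols."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return -sum((c / total) * log2(c / total) for c in counts.values())


def conditional_entropy(pairs):
    """Plug-in estimate of H(Y|X) = H(X,Y) - H(X) from (x, y) pairs."""
    pairs = list(pairs)
    return entropy(pairs) - entropy([x for x, _ in pairs])


def mutual_information(pairs):
    """Plug-in estimate of I(X;Y) = H(X) + H(Y) - H(X,Y) from (x, y) pairs."""
    pairs = list(pairs)
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    return entropy(xs) + entropy(ys) - entropy(pairs)


# Toy example (hypothetical data): characters of a short string and
# pairs of adjacent characters (bigrams).
text = "ababababa"
bigrams = list(zip(text, text[1:]))

unigram_entropy = entropy(text)                  # uncertainty about a single character
next_char_entropy = conditional_entropy(bigrams) # uncertainty about the next character given the current one
bigram_mi = mutual_information(bigrams)          # information shared by adjacent characters
```

In this toy string each character fully determines the next one, so the conditional entropy is zero and the mutual information equals the entropy of a single position. On real corpora, plug-in estimates of this kind tend to underestimate entropy when the sample is small relative to the number of distinct symbols, which is one instance of the estimation pitfalls the summary mentions.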

Instructors

Christian Bentz

Ximena Gutierrez-Vasques

All the data, code, and slides can be found on GitHub.